Hybrid AI Is Inevitable. Workflow Discipline Is Not.

Here’s the uncomfortable truth about enterprise AI:

Two teams can use the same models, the same tools, and the same vendors—and end up with radically different cost, performance, and outcomes.

The difference isn’t the model.

It isn’t the GPU.

It isn’t the token price.

It’s the workflow.

That’s the part we’re still terrible at managing.

Hybrid AI isn’t a strategy choice. It’s an economic outcome.

Most enterprises don’t need a 480B-parameter reasoning model running 24×7 on eight GPUs.

They need it:

- occasionally
- for bursty reasoning
- at specific decision points
- surrounded by far more mundane, repeatable work

Owning that capacity full-time makes no sense.

But pushing everything through token-based APIs doesn’t either.

So enterprises end up hybrid by default:

- Local or controlled compute for repeatable, cost-sensitive execution
- External reasoning services for intermittent, high-leverage thinking

This isn’t elegance.

It’s math.

It’s the same logic that produced hybrid cloud—predictable workloads stay close, elastic or specialized workloads burst out.
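To make the math concrete, here is a minimal break-even sketch. Every number in it is a made-up placeholder, not a vendor price, and the `CostModel` class and its field names are my own assumptions for illustration. It only shows the shape of the comparison: token spend grows with volume, while owned capacity is paid for whether it is used or not.

```python
import math
from dataclasses import dataclass


@dataclass
class CostModel:
    # All figures are hypothetical placeholders, not real vendor prices.
    api_price_per_million_tokens: float  # USD per 1M tokens through an external API
    gpu_fixed_cost_per_month: float      # USD per month for one unit of owned capacity
    gpu_tokens_per_month: float          # tokens one unit of capacity can serve monthly

    def api_cost(self, tokens: float) -> float:
        """Token-based cost grows linearly with volume."""
        return tokens / 1_000_000 * self.api_price_per_million_tokens

    def gpu_cost(self, tokens: float) -> float:
        """Owned capacity is paid in whole units, used or not."""
        units = max(1, math.ceil(tokens / self.gpu_tokens_per_month))
        return units * self.gpu_fixed_cost_per_month

    def break_even_tokens(self) -> float:
        """Monthly volume where one unit of owned capacity matches API spend."""
        return (self.gpu_fixed_cost_per_month
                / self.api_price_per_million_tokens * 1_000_000)

    def cheaper_option(self, tokens: float) -> str:
        return "api" if self.api_cost(tokens) < self.gpu_cost(tokens) else "gpu"


model = CostModel(
    api_price_per_million_tokens=10.0,
    gpu_fixed_cost_per_month=20_000.0,
    gpu_tokens_per_month=5_000_000_000,
)

# Occasional, bursty use stays on tokens; steady high volume moves to owned compute.
print(model.cheaper_option(100_000_000))    # low monthly volume
print(model.cheaper_option(4_000_000_000))  # high monthly volume
print(model.break_even_tokens())
```

With these placeholder inputs the low-volume case favors the API and the high-volume case favors owned capacity. A real comparison would also need the operational costs discussed in the next section, which this sketch leaves out.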

The hidden benefit of hybrid AI: avoiding operational drag

There’s a second reason enterprises drift hybrid that rarely gets said out loud:

Running reasoning models is operationally expensive.

Not just GPUs, but lifecycle management, evaluation, prompt discipline, governance, and safety.

Most teams don’t want to operate reasoning.

They want to access it.

Hybrid AI lets enterprises use advanced reasoning without turning it into another platform they own.

That’s not laziness.

That’s focus.

The real problem hybrid AI creates: control

Here’s where things break down.

Token-based systems give you:

- convenience
- elasticity
- speed to value

They do not give you:

- saturation metrics
- queue depth
- backpressure
- clear “add capacity now” signals

You don’t get an operator dashboard.

You get an invoice.

So when costs rise, the only knob left is:

“Use it less.”

That’s not control.

That’s abstinence.

And this is where AI cost management quietly becomes a developer workflow problem, not an infrastructure one.
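One way to get a signal before the invoice arrives is to meter usage on the client side, per workflow step. The sketch below is an assumption about how that could look, not a feature of any provider: `UsageLedger`, its fields, the step names, the price, and the budget are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class UsageLedger:
    """Client-side record of token spend, grouped by workflow step."""
    price_per_million_tokens: float  # hypothetical USD price
    monthly_budget: float            # hypothetical USD budget
    tokens_by_step: dict = field(default_factory=lambda: defaultdict(int))

    def record(self, step: str, tokens: int) -> None:
        """Add the token count of one call to the step that made it."""
        self.tokens_by_step[step] += tokens

    def spend(self) -> float:
        """Estimated spend so far, in USD."""
        total = sum(self.tokens_by_step.values())
        return total / 1_000_000 * self.price_per_million_tokens

    def budget_used(self) -> float:
        """Fraction of the monthly budget consumed so far."""
        return self.spend() / self.monthly_budget

    def top_steps(self, n: int = 3) -> list:
        """Steps ranked by token use, heaviest first."""
        ranked = sorted(self.tokens_by_step.items(),
                        key=lambda kv: kv[1], reverse=True)
        return ranked[:n]


ledger = UsageLedger(price_per_million_tokens=10.0, monthly_budget=100.0)
ledger.record("label_segments", 6_000_000)
ledger.record("taxonomy_review", 1_000_000)
ledger.record("label_segments", 1_000_000)

print(ledger.budget_used())  # share of budget consumed
print(ledger.top_steps(1))   # the step doing most of the spending
```

This is not an operator dashboard, but it does turn "costs went up" into "this step is where the tokens go," which is the question a developer can actually act on.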

A concrete example: my Tech Field Day analysis

In my TFD analysis project, I processed 230K segments to surface CTO priorities. The top concern wasn’t cost—it was risk and complexity. But getting there required using both tokens and GPUs intentionally.

I used token-based systems to:

- create fine-tuning data
- shape taxonomy
- iterate on meaning
- validate whether categories actually reflected CTO concerns

That work was human-bound.

Iteration speed mattered.

Tokens were the right economic tool.

But once the labels stabilized?

I moved the workload to GPUs.

Not because GPUs were “faster.”

Not because tokens failed.

Because continuing to use tokens at that stage would have been convenient—and economically irresponsible.

Token pricing is linear.

My workload was not.

At that point, tokens stopped accelerating thinking and started taxing repetition.

So I didn’t “use the system less.”

I moved the work.

That’s what operators do when metrics are missing.

This is why workflow education matters more than models

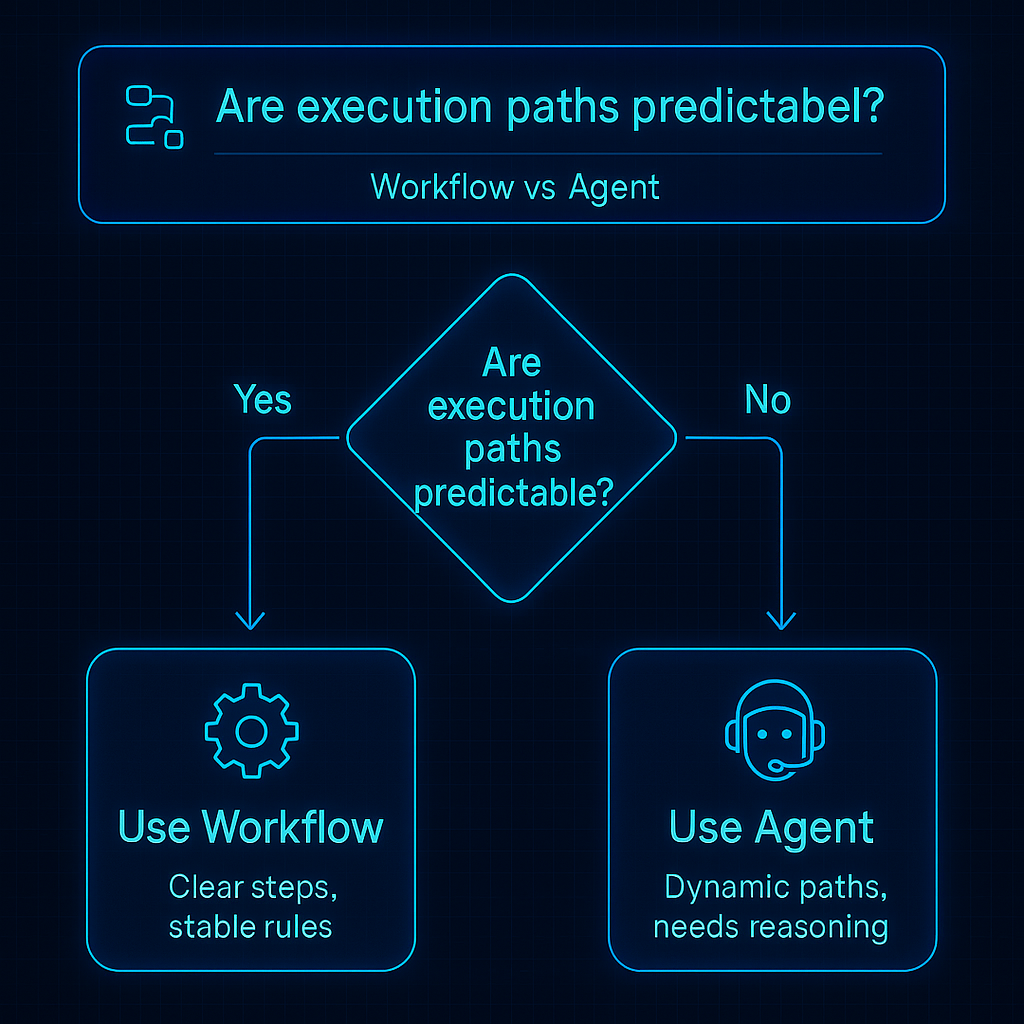

Hybrid AI fails when teams treat reasoning as a default instead of a deliberate escalation.

What actually determines cost and performance is:

- when developers invoke reasoning
- how often they iterate
- what runs locally vs externally
- how much rework exists in the loop

Two teams, same tools.

One burns budget.

One scales sustainably.

Same platform.

Different discipline.

Where this fits in the 4+1 model

This is why I keep coming back to Layer 2 in my 4+1 framework.

Not compute.

Not models.

Orchestration and workflow control.

Layer 2 is where decisions get routed:

- “Think hard here”
- “Run cheap here”
- “Batch this”
- “Don’t reason twice”

If developer workflows aren’t designed intentionally at that layer, no amount of GPU optimization or token negotiation will save you.
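The four routing decisions above can be sketched in a few lines. This is an illustration under stated assumptions, not the 4+1 framework's implementation: `Task`, `Router`, and the `reason` and `run_local` callables are hypothetical stand-ins for whatever external reasoning service and local model a team actually uses.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Task:
    prompt: str
    needs_reasoning: bool = False   # set deliberately at a decision point
    latency_tolerant: bool = False  # True when the result can wait for a batch


@dataclass
class Router:
    reason: Callable[[str], str]     # hypothetical external reasoning call
    run_local: Callable[[str], str]  # hypothetical local model call
    cache: Dict[str, str] = field(default_factory=dict)
    batch_queue: List[Task] = field(default_factory=list)

    def _key(self, prompt: str) -> str:
        return hashlib.sha256(prompt.encode("utf-8")).hexdigest()

    def route(self, task: Task) -> Tuple[str, Optional[str]]:
        """Return (path taken, result); the result is None for batched work."""
        if task.needs_reasoning:
            key = self._key(task.prompt)
            if key in self.cache:
                return "cached", self.cache[key]  # don't reason twice
            self.cache[key] = self.reason(task.prompt)
            return "reasoning", self.cache[key]   # think hard here
        if task.latency_tolerant:
            self.batch_queue.append(task)
            return "batched", None                # batch this
        return "local", self.run_local(task.prompt)  # run cheap here

    def flush_batch(self) -> List[str]:
        """Run all queued tasks locally in one pass and empty the queue."""
        results = [self.run_local(t.prompt) for t in self.batch_queue]
        self.batch_queue.clear()
        return results
```

The design choice worth noticing is that reasoning is opt-in: a task reaches the expensive path only when someone sets `needs_reasoning`, and an identical prompt never pays for it twice. Everything else defaults to the cheap path.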

The takeaway (hard version)

Hybrid AI is inevitable.

Uncontrolled consumption is optional.

Until enterprises treat developer workflows as the primary control plane, AI cost management will stay reactive.

And “use it less” will keep showing up as a substitute for real operations.

That’s not a tooling failure.

That’s a workflow failure.


Keith Townsend is a seasoned technology leader and Founder of The Advisor Bench, specializing in IT infrastructure, cloud technologies, and AI. With expertise spanning cloud, virtualization, networking, and storage, Keith has been a trusted partner in transforming IT operations across industries, including pharmaceuticals, manufacturing, government, software, and financial services.

Keith’s career highlights include leading global initiatives to consolidate multiple data centers, unify disparate IT operations, and modernize mission-critical platforms for “three-letter” federal agencies. His ability to align complex technology solutions with business objectives has made him a sought-after advisor for organizations navigating digital transformation.

A recognized voice in the industry, Keith combines his deep infrastructure knowledge with AI expertise to help enterprises integrate machine learning and AI-driven solutions into their IT strategies. His leadership has extended to designing scalable architectures that support advanced analytics and automation, empowering businesses to unlock new efficiencies and capabilities.

Whether guiding data center modernization, deploying AI solutions, or advising on cloud strategies, Keith brings a unique blend of technical depth and strategic insight to every project.