When My $4K DGX Spark Arrived, It Revealed What the Cloud Had Been Hiding

When my DGX Spark showed up—a compact, powerful little system I expected to use for local inference work—I assumed the Virtual CTO Advisor would just work. After all, the same application had run smoothly on GCP for months. I had compute. I had storage. I had bandwidth. On paper, everything I needed was there.

But the first time I tried to stand up the application, something became obvious that I had never fully appreciated until I left the cloud:

Even with the right hardware, the system was missing an entire architectural function.

GCP had quietly been giving me decisions I didn’t realize were decisions:

-

Where to run workloads

-

How to enforce data locality

-

How to balance cost and latency

-

How to perform safe, policy-driven scaling

-

How to constrain execution to the right region

-

How to avoid egress penalties through implicit placement

Those decisions weren’t coming from Kubernetes.

And they weren’t part of model serving.

They were coming from a layer that sits between orchestration (2A) and runtime (2B)—a layer that the cloud implements implicitly but that doesn’t exist on bare metal. That missing piece is what eventually crystallized into Layer 2C: The Reasoning Plane in the 4+1 Model.

Once you see that layer, the rest of the architecture snaps into place.

And it finally clarifies the recurring confusion around vendors like VAST Data.

Why VAST Gets Misunderstood

(And Why the DGX Moment Makes It Clear)

Before the DGX experiment, I often found myself explaining to customers why VAST wasn’t an application platform or an orchestration system—even though the “AI OS” language sometimes suggests otherwise. VAST is a critical part of the AI stack, but its power comes from not trying to operate the layers above it.

The DGX Spark made this distinction concrete.

Even with world-class data performance behind it, the system still couldn’t run modern AI applications without the orchestration and reasoning capabilities that live above Layer 1. That’s not because VAST is lacking—it’s because the Data Plane cannot replace the Application Plane.

Once you map VAST to the 4+1 Model, its role becomes unambiguous.

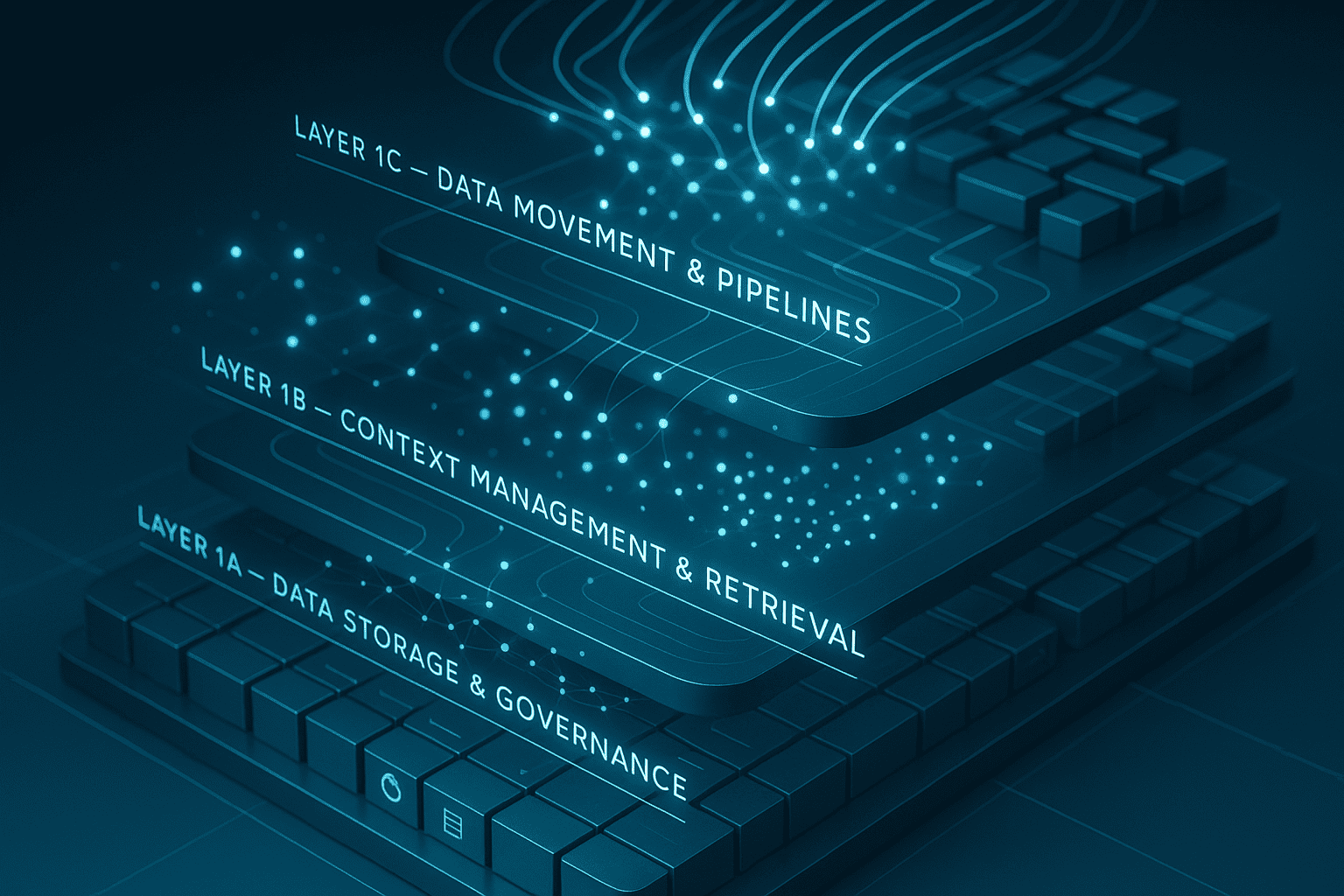

VAST Data Is the Entire Data Plane (Layer 1A + 1B + 1C)

Here’s what VAST actually does—and why it’s so important:

1A — Storage & Governance

VAST delivers a unified, high-performance storage layer with metadata, durability, and governance baked in. For many enterprises, this becomes the canonical place where AI-usable data lives.

1B — Context Management & Retrieval

This is VAST’s differentiator. Native vector search and semantic retrieval run next to the data, eliminating an entire class of ETL and reducing context-fetch latency for RAG.

1C — Data Movement & Pipelines

High-throughput ingest, streaming, and efficient data access patterns feed downstream runtimes without bottlenecks.

Put simply:

VAST is where your data lives, moves, and becomes retrievable with context.

It is not where your models execute or where your orchestration logic resides.

And that’s the point.

In a proper AI infrastructure, the Data Plane and Application Plane are not interchangeable. They complement each other.

The DGX Spark + VAST Thought Experiment

If you connect a DGX Spark to a VAST cluster, you instantly get:

-

GPU-ready throughput

-

high-speed retrieval

-

unified metadata

-

governed storage

-

vector search embedded in the data plane

This is an excellent foundation.

But your application still needs:

-

a runtime (Layer 2B)

-

GPU orchestration (2A)

-

policy-driven reasoning (2C)

-

agent frameworks and business logic (Layer 3)

The DGX moment makes that obvious.

The cloud hid Layer 2C.

VAST never claimed to deliver it.

And the 4+1 Model gives us the vocabulary to articulate exactly where the boundaries lie.

Why This Clarity Matters

Enterprises routinely blur these layers.

RFPs blur these layers.

Vendor claims blur these layers.

A storage vendor gets compared to a runtime vendor.

A vector database gets confused with a model server.

A GPU cluster gets mistaken for an AI platform.

A copilot framework gets treated as infrastructure.

The 4+1 Model is designed to stop that confusion.

VAST = Layer 1

Orchestration = Layer 2A

Runtime = Layer 2B

Autonomy = Layer 2C

Agents/Applications = Layer 3

Hardware = Layer 0

Clear boundaries make it possible to assemble a best-of-breed AI stack without accidentally over- or under-investing in the wrong layers.

That clarity started with a $4K DGX Spark sitting on my desk, refusing to run an application that worked flawlessly in the cloud.

Once I understood why it failed, the entire AI stack became legible in a way it hadn’t been before.

If you want to see how this model applies to your own environment, try the Virtual CTO Advisor Stack Builder. It uses the 4+1 framework to evaluate your workloads and produce a tailored infrastructure design you can share with your platform team.

Share This Story, Choose Your Platform!

Keith Townsend is a seasoned technology leader and Founder of The Advisor Bench, specializing in IT infrastructure, cloud technologies, and AI. With expertise spanning cloud, virtualization, networking, and storage, Keith has been a trusted partner in transforming IT operations across industries, including pharmaceuticals, manufacturing, government, software, and financial services.

Keith’s career highlights include leading global initiatives to consolidate multiple data centers, unify disparate IT operations, and modernize mission-critical platforms for “three-letter” federal agencies. His ability to align complex technology solutions with business objectives has made him a sought-after advisor for organizations navigating digital transformation.

A recognized voice in the industry, Keith combines his deep infrastructure knowledge with AI expertise to help enterprises integrate machine learning and AI-driven solutions into their IT strategies. His leadership has extended to designing scalable architectures that support advanced analytics and automation, empowering businesses to unlock new efficiencies and capabilities.

Whether guiding data center modernization, deploying AI solutions, or advising on cloud strategies, Keith brings a unique blend of technical depth and strategic insight to every project.