A CTO’s Guide to Migrating from VMware

Executive Summary

HPE’s new “VM Reset” offer, which bundles HPE Private Cloud Business Edition with the Morpheus VM Essentials (VME) hypervisor, is a direct response to the post‑Broadcom VMware sticker shock. By swapping VMware’s per-core subscription for HPE’s per-socket licensing, customers are reporting savings of 5–10× or more. One 32-server estate, for example, fell from US $461k to US $38k over three years, roughly a 12× reduction.

This guide, adapted from The CTO Advisor’s async framework, walks through the real steps to vet and plan a transition—not just financially, but operationally and strategically.

1 | Structured Evaluation Framework

| Phase | Key Question | Deliverable |
| --- | --- | --- |
| Situation & Objectives | What cost, agility, or risk goals matter? | Exec objectives, success metrics |
| Workload Segmentation | Which VMs are cost-heavy, change-tolerant, or mission-critical? | Tiering matrix, dependency map |
| Technical Fit & Gaps | Does VME hit parity for HA, live migration, ecosystem support? | Feature-gap log, mitigation plan |
| Financial Modelling | CapEx/OpEx delta, dual-stack overlap, server/storage reuse? | 3- to 5-year TCO |
| Risk & Mitigation | Product maturity, skills, support, vendor lock? | Risk register, exit criteria |
| Roadmap & Governance | Sequenced migration waves, KPIs, change cadence? | 18-month roadmap |
| Validation & POC | Pilot cluster, DR fail-over, perf baselines? | Go/No-Go sign-off |

Keith On Call subscribers get the full worksheet pack for each phase.

2 | Where the Money (and Value) Shows Up

| Benefit | Detail |
| --- | --- |
| License relief | VME at ~US $600/socket vs. VCF at ~US $350/core. Savings spike on 24- and 32-core CPUs. |
| Stack consolidation | Compute + Alletra MP storage + Aruba CX micro-segmentation under one control plane. |
| Multi-hypervisor runway | Run VME clusters beside vSphere; retire VMware on your schedule. |
| Modern data resilience | New HPE–Veeam image-based backup & replication, portable across hypervisors. |
| AI-ready GPU support | VME 8.x brings GPU passthrough & vGPU (SR-IOV) aligned with Gen12 AI servers. |
| Hybrid Cloud Note | Today, “VM Reset” is private-cloud only. If you’re running VMware Cloud on AWS/Azure, VME doesn’t yet support equivalent hybrid models. Plan accordingly. |
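
To see why savings spike on high-core-count CPUs, here is a minimal sketch of the per-socket vs. per-core arithmetic. The list prices come from the table above; the `Estate` shape, the dual-socket layout, and the assumption that both prices cover the same billing period are illustrative, not quoted terms.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Estate:
    """Hypothetical description of a server estate (not an HPE or VMware object)."""
    servers: int
    sockets_per_server: int
    cores_per_socket: int

    @property
    def sockets(self) -> int:
        return self.servers * self.sockets_per_server

    @property
    def cores(self) -> int:
        return self.sockets * self.cores_per_socket


def per_socket_cost(estate: Estate, price_per_socket: float) -> float:
    """License cost when the vendor charges per physical socket."""
    return estate.sockets * price_per_socket


def per_core_cost(estate: Estate, price_per_core: float) -> float:
    """License cost when the vendor charges per physical core."""
    return estate.cores * price_per_core


def cost_ratio(estate: Estate, price_per_core: float = 350.0,
               price_per_socket: float = 600.0) -> float:
    """How many times more the per-core model costs (same billing period assumed)."""
    return per_core_cost(estate, price_per_core) / per_socket_cost(estate, price_per_socket)


if __name__ == "__main__":
    for cores in (16, 24, 32):
        estate = Estate(servers=32, sockets_per_server=2, cores_per_socket=cores)
        print(f"{cores} cores/socket -> per-core is {cost_ratio(estate):.1f}x per-socket")
```

At these list prices the ratio depends only on cores per socket, which is why denser CPUs widen the gap. Real quotes will include discounts, core minimums, and support tiers that this sketch ignores.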

3 | Day‑2 Ops Reality Check

- Tooling – Morpheus replaces vCenter. Admins must adapt to a new UI, CLI, and API stack. Automation shifts from PowerCLI to Terraform/Ansible modules supported by Morpheus.

- Monitoring – VME exposes Prometheus endpoints; VMware-native observability (vRealize) doesn’t map 1:1. Baseline all alerting rules from scratch.

- Lifecycle & Patching – VME updates include the kernel and the KVM stack. You’ll need longer change windows and rollback plans, especially early in adoption.

- Networking – Micro-segmentation moves from NSX to Aruba switches. While Aruba delivers zero-trust segmentation, it is not a 1:1 replacement for NSX.
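
One way to start the alert re-baselining is to check which legacy alerts have a backing metric on the new platform. The sketch below parses Prometheus text exposition format and flags alerts with no matching metric. The metric names, the sample payload, and the alert-to-metric mapping are all made up for illustration; they are not actual VME metric names.

```python
import re

# Metric names in the Prometheus text format start with a letter, "_" or ":".
METRIC_NAME = re.compile(r"^([a-zA-Z_:][a-zA-Z0-9_:]*)")


def exposed_metrics(exposition_text: str) -> set[str]:
    """Return the set of metric names found in a scrape payload."""
    names = set()
    for line in exposition_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):  # skip blanks, HELP and TYPE lines
            continue
        match = METRIC_NAME.match(line)
        if match:
            names.add(match.group(1))
    return names


def unbacked_alerts(alert_to_metric: dict[str, str], available: set[str]) -> list[str]:
    """Alerts whose required metric is not exposed by the new platform."""
    return sorted(alert for alert, metric in alert_to_metric.items()
                  if metric not in available)


# Hypothetical scrape payload and legacy alert inventory.
SAMPLE = """\
# HELP vm_cpu_usage_ratio Hypothetical per-VM CPU usage.
# TYPE vm_cpu_usage_ratio gauge
vm_cpu_usage_ratio{vm="web01"} 0.42
host_memory_free_bytes 1.2e+10
"""
LEGACY_ALERTS = {
    "HighCpu": "vm_cpu_usage_ratio",
    "DatastoreLatency": "datastore_latency_seconds",
}

if __name__ == "__main__":
    print(unbacked_alerts(LEGACY_ALERTS, exposed_metrics(SAMPLE)))
```

Anything the check flags needs either a new metric source or a rewritten rule before cut-over.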

⚠️ Keith’s Field Insight: Integration Back-Out Risk

One of the most underestimated delays in VMware exits is compliance-bound integrations that rely on NSX.

In a typical NSX setup, firewall rules tied to a VM are automatically cleaned up from vCenter, NSX, and associated compliance workflows the moment that VM is decommissioned.

With Aruba today, there is no direct equivalent. That means you’re suddenly in the business of rebuilding these automation hooks—or manually backstopping them.

This isn’t just a security risk—it’s a compliance audit gap. And it’s a key reason why some teams delay migration by 6–9 months until network policy workflows are re-engineered.
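
If you end up backstopping the cleanup yourself, the core of it is a reconciliation job: compare firewall rules against the live VM inventory and flag rules that point at VMs that no longer exist. The sketch below assumes you can export both lists from your own tooling; `FirewallRule` and its fields are hypothetical, not an Aruba or Morpheus data model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FirewallRule:
    """Hypothetical exported rule record: one rule bound to one VM name."""
    rule_id: str
    vm_name: str


def find_orphaned_rules(rules: list[FirewallRule],
                        active_vms: set[str]) -> list[FirewallRule]:
    """Return rules whose VM is missing from the active inventory.

    Names are compared case-insensitively because inventories often disagree
    on casing.
    """
    active = {name.lower() for name in active_vms}
    return [rule for rule in rules if rule.vm_name.lower() not in active]


if __name__ == "__main__":
    rules = [
        FirewallRule("fw-1", "web01"),
        FirewallRule("fw-2", "batch07"),  # VM was decommissioned
        FirewallRule("fw-3", "DB01"),
    ]
    inventory = {"web01", "db01"}
    for rule in find_orphaned_rules(rules, inventory):
        print(f"orphaned: {rule.rule_id} -> {rule.vm_name}")
```

Running this on a schedule and logging the output gives auditors evidence that stale rules are detected, even before removal is automated.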

🔍 Keith’s Field Insight: API Drift

If you’ve written custom provisioning tools or CMDB integrations that rely on vSphere APIs, expect to re-architect. Morpheus won’t replicate every hook—and that gap will show up fast in compliance, reporting, and automation pipelines.
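
A quick first pass at sizing that re-architecture is to scan your repos for vSphere-specific hooks. This is a rough sketch: the marker list is a small, non-exhaustive sample (the pyVmomi and govmomi SDK names, a few PowerCLI cmdlets, vCenter REST paths), and the function names are illustrative.

```python
import re
from pathlib import Path

# Small, non-exhaustive sample of vSphere-specific markers.
VSPHERE_MARKERS = {
    "pyVmomi (Python SDK)": re.compile(r"\bpyVmomi\b", re.IGNORECASE),
    "govmomi (Go SDK)": re.compile(r"\bgovmomi\b", re.IGNORECASE),
    "PowerCLI cmdlet": re.compile(r"\b(?:Connect-VIServer|Get-VM\w*|New-VM)\b",
                                  re.IGNORECASE),
    "vCenter REST path": re.compile(r"/(?:rest|api)/vcenter\b"),
}


def audit_source(text: str) -> dict[str, int]:
    """Count marker hits in one file's text; markers with zero hits are omitted."""
    hits = {}
    for label, pattern in VSPHERE_MARKERS.items():
        count = len(pattern.findall(text))
        if count:
            hits[label] = count
    return hits


def audit_tree(root: str) -> dict[str, dict[str, int]]:
    """Walk a directory and report files that contain any marker."""
    report = {}
    for path in Path(root).rglob("*"):
        if path.is_file():
            hits = audit_source(path.read_text(errors="ignore"))
            if hits:
                report[str(path)] = hits
    return report


if __name__ == "__main__":
    sample = "from pyVmomi import vim\nGet-VM -Name web01\n"
    print(audit_source(sample))
```

The hit counts will not tell you how hard each integration is to port, but they do give you a list of files and owners to interview.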

4 | Risk Assessment & Mitigations

| Risk | Likelihood | Impact | Mitigation |
| --- | --- | --- | --- |
| Feature gaps vs. vSphere (DRS, agent-less backup) | Medium | Med–High | Pilot Tier 2 workloads first; use agent-based backups until agent-less backup is GA |
| Ecosystem depth (ISV, monitoring, security, automation tooling) | Medium | Medium | Validate vendor support; use Morpheus plug-in SDK; audit custom vSphere API usage |
| Skill shortage | High | High | Budget HPE PS or internal up-skilling; cert classes at HPE Discover. Expect to allocate 10–15% of projected license savings to training support. |
| Vendor lock-in | Medium | Medium | Keep a dual-stack exit clause and open-API tooling |
| Migration downtime | High | High | Veeam replication + Morpheus live-migration for near-zero cut-over |
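
If you want the register sortable, a simple likelihood × impact score is enough to rank the rows above. The numeric scale here is an assumption for illustration (including 2.5 for "Med-High"); swap in whatever scale your risk committee already uses.

```python
# Assumed numeric scale; not part of the original register.
SCALE = {"Low": 1.0, "Medium": 2.0, "Med-High": 2.5, "High": 3.0}

# (risk, likelihood, impact) taken from the table above.
RISKS = [
    ("Feature gaps vs. vSphere", "Medium", "Med-High"),
    ("Ecosystem depth", "Medium", "Medium"),
    ("Skill shortage", "High", "High"),
    ("Vendor lock-in", "Medium", "Medium"),
    ("Migration downtime", "High", "High"),
]


def rank_risks(risks, scale=SCALE):
    """Return (risk, score) pairs sorted by score, highest first.

    Python's sort is stable, so ties keep their original table order.
    """
    scored = [(name, scale[likelihood] * scale[impact])
              for name, likelihood, impact in risks]
    return sorted(scored, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for name, score in rank_risks(RISKS):
        print(f"{score:>4.1f}  {name}")
```

On this scale, skill shortage and migration downtime tie at the top, which matches where the budget and the pilot effort should go first.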

5 | Mission-Critical Workloads Strategy

- Classify workloads by RTO/RPO and SLA.

- Run Dual-Stack – Keep Tier 1 on VMware for 6–12 months; pilot Tier 2/DevTest on VME.

- Validate HA, perf, and fail-over under production load.

- Regulatory Carve-outs – If an ISV demands “VMware-only,” ring-fence a cluster.

⚠️ Keith’s Field Insight: The 10% That Break the Plan

In every VMware exit I’ve seen, about 10% of apps require VMware-specific features. But those 10% can cause 80% of the pain.

If you keep a small “legacy” vSphere footprint just for those workloads, you may spend as much on that cluster as you do on the full HPE VME deployment.

Your mitigation options: ring-fence, replatform, or renegotiate with ISVs. But whichever you choose—do it before you claim 90% cost savings.
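
To make the point concrete, here is a small sketch of how a residual vSphere footprint erodes the headline number. It reuses the US $461k and US $38k figures from the executive summary; the residual cost is a hypothetical input, set equal to the VME spend to mirror the scenario above.

```python
def effective_savings(baseline_cost: float, new_platform_cost: float,
                      residual_legacy_cost: float = 0.0) -> float:
    """Fraction of the baseline actually saved once residual legacy spend is counted."""
    if baseline_cost <= 0:
        raise ValueError("baseline_cost must be positive")
    return 1.0 - (new_platform_cost + residual_legacy_cost) / baseline_cost


if __name__ == "__main__":
    headline = effective_savings(461_000, 38_000)
    with_legacy = effective_savings(461_000, 38_000, residual_legacy_cost=38_000)
    print(f"headline savings:       {headline:.1%}")
    print(f"with legacy ring-fence: {with_legacy:.1%}")
```

The inputs matter more than the formula: get a real quote for the ring-fenced cluster before the savings figure goes into a board deck.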

📅 Migration Wave Example

- Months 1–2: Assess + pilot Dev/Test

- Months 3–6: Migrate 150 Tier 2 VMs

- Months 7–12: Stand up dual-stack DR + evaluate Tier 1 workloads

- Month 12+: Retire legacy VMware clusters based on ISV carve-outs

6 | Disaster Recovery & Business Continuity Integration

- Backup/Restore – Veeam Data Platform enables image-based backups with support for cross-hypervisor restore.

- Replication – Near-sync replication to a secondary site via Veeam or Zerto. Orchestration tools must be validated.

- Fail-over Testing – Bi-directional fail-over (VMware → VME and back) must be validated in POC with real app load profiles and live network policies.

- Segmentation Alignment – Aruba-based segmentation policies must be coordinated across primary and DR sites to avoid drift.
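
Segmentation drift between sites is easy to check once both policy sets are exported to a common shape. The sketch below assumes each site's policies can be dumped as a name-to-rules mapping; that export format is hypothetical and not an Aruba API.

```python
def policy_drift(primary: dict[str, frozenset[str]],
                 dr: dict[str, frozenset[str]]) -> dict[str, list[str]]:
    """Compare two sites' segmentation policies by name and rule content."""
    shared = primary.keys() & dr.keys()
    return {
        "missing_in_dr": sorted(primary.keys() - dr.keys()),
        "extra_in_dr": sorted(dr.keys() - primary.keys()),
        "changed": sorted(name for name in shared if primary[name] != dr[name]),
    }


if __name__ == "__main__":
    primary_site = {
        "web-tier": frozenset({"allow tcp/443 from lb", "deny all"}),
        "db-tier": frozenset({"allow tcp/5432 from app", "deny all"}),
    }
    dr_site = {
        "web-tier": frozenset({"allow tcp/443 from lb", "deny all"}),
        "db-tier": frozenset({"allow tcp/5432 from any", "deny all"}),  # drifted
        "legacy-tier": frozenset({"allow all"}),
    }
    print(policy_drift(primary_site, dr_site))
```

Running the comparison before every fail-over test turns policy drift into a pre-check instead of a surprise mid-test.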

⚠️ Keith’s Field Insight: “Quick Wins” Are Rare in DR

I’ve never seen a DR playbook “just work” during a major platform shift—especially in large or regulated environments.

DR testing isn’t a checkbox—it’s a multi-month process involving coordination across storage, networking, identity, observability, and app teams.

On paper, your replication tools may say, “We support VME.” In practice, the second you touch IP ranges, storage paths, or DNS, you’ll break your DR plan. Expect to rebuild.

🛠 Keith’s Note: One team I worked with spent 3 months rebuilding DR runbooks because their backup vendor didn’t support VME metadata hooks. Don’t assume your orchestration tool speaks both hypervisors fluently.

7 | Resource & Timeline Estimate

| Workstream | FTE-Months | Notes |
| --- | --- | --- |
| Discovery & Assessment | 8 | Inventory, dep-mapping, TCO model |
| Pilot Build | 4 | 3-node dHCI + Alletra MP + Aruba CX |
| Migration Factory (400 VMs) | 24 | 25–30 VMs/week incl. QA |
| Training & Enablement | 4 (ongoing) | HPE/Morpheus certs |
| DR Re-engineering | 4 | Re-point backups, test fail-over |
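
As a sanity check on the migration factory row, the throughput figure translates into calendar time like this. It is a rough sketch that assumes a steady weekly rate with no freezes or rework; the function name is illustrative.

```python
import math


def weeks_to_migrate(vm_count: int, vms_per_week: int) -> int:
    """Whole weeks needed to move vm_count VMs at a steady weekly rate."""
    if vms_per_week <= 0:
        raise ValueError("vms_per_week must be positive")
    return math.ceil(vm_count / vms_per_week)


if __name__ == "__main__":
    for rate in (25, 30):
        print(f"{rate} VMs/week -> {weeks_to_migrate(400, rate)} weeks for 400 VMs")
```

At 25–30 VMs per week, 400 VMs takes roughly 14 to 16 weeks of pure factory time, before change freezes and failed cut-overs are added back in.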

Budget Warning: You will run dual licensing/support for 12–18 months. Don’t claim 90% savings until that burns off.
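
Here is a minimal sketch of what that overlap does to the three-year picture, using the US $461k and US $38k figures from the executive summary. It assumes legacy spend accrues evenly per month and stops at cut-over, which is a simplification: real VMware subscriptions are usually term-based, so your actual overlap cost may be higher.

```python
def term_cost_with_overlap(legacy_term_cost: float, new_term_cost: float,
                           overlap_months: int, term_months: int = 36) -> float:
    """Total spend over the term when the legacy stack runs for overlap_months."""
    if not 0 <= overlap_months <= term_months:
        raise ValueError("overlap_months must be between 0 and term_months")
    legacy_monthly = legacy_term_cost / term_months  # assumes even monthly accrual
    return new_term_cost + legacy_monthly * overlap_months


def savings_fraction(legacy_term_cost: float, total_cost: float) -> float:
    """Fraction saved relative to staying on the legacy stack for the whole term."""
    return 1.0 - total_cost / legacy_term_cost


if __name__ == "__main__":
    for months in (0, 12, 18):
        total = term_cost_with_overlap(461_000, 38_000, months)
        print(f"{months:>2} months overlap: total ${total:,.0f}, "
              f"savings {savings_fraction(461_000, total):.1%}")
```

Under these assumptions, a 12–18 month overlap pulls first-term savings down to somewhere around 40–60%, which is the number to show finance for the first contract cycle.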

8 | AI Infrastructure Ripple Effects

- Budget Release – License savings (~US $400k) can fund GPU clusters or AI appliances.

- GPU Scheduling – VME GPU features trail vSphere. Keep inference workloads on VMware until VME matures.

- Data Gravity – If AI pipelines sit on vSphere-backed NFS/VMFS, migrate data before compute.

- Team Bandwidth – Don’t let VM migration waves collide with critical AI MVP delivery.

9 | Recommended Next Steps

- Download Keith On Call worksheets for phases 1–3.

- Launch a 10-VM POC cluster to validate key features.

- Refine the financial model, including overlap costs.

- Book a red-team async session via Keith On Call to pressure-test assumptions and briefings.

🎯 Keith’s Take

This isn’t just a licensing decision—it’s a platform shift. Treat it like a spreadsheet exercise, and you’ll get burned in day-2 ops.

But if you scope it like any major infra change—with DR, skills, observability, networking, and board comms aligned—you can absolutely unlock the promised savings without jeopardizing uptime or momentum. That’s what I help teams do—before the first POC node is even racked.

🔑 Executive Summary Revisited

- Budget relief is real—but only after 12–18 months of dual stack.

- The 10% of “VMware-only” apps create 80% of the migration drag.

- DR testing will take months, not weeks—plan around it.

- NSX replacements are not plug-and-play—compliance teams must be engaged early.

- VME’s maturity for GPU/AI workloads is still catching up—don’t overpromise to stakeholders.

📣 Ready to Sanity-Check Your VMware Exit?

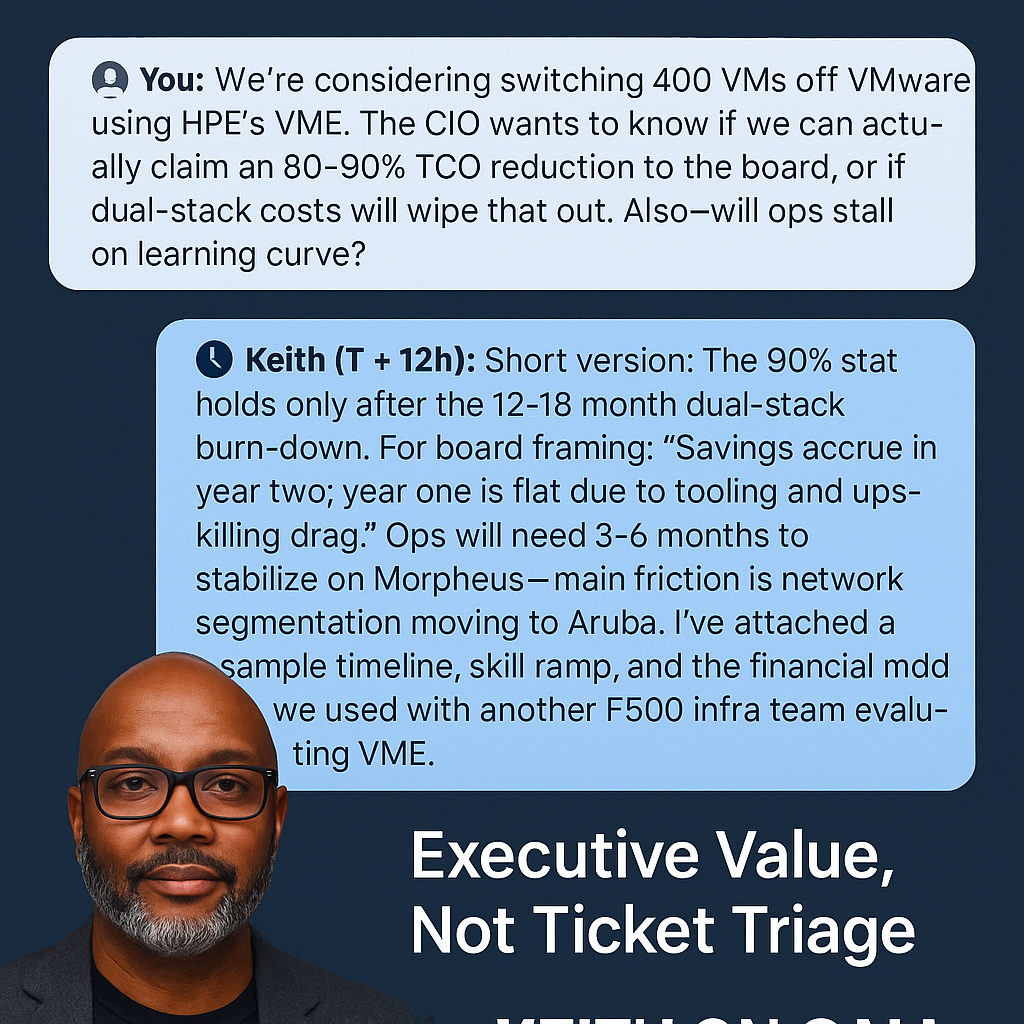

Keith On Call gives you direct async access to Keith—so you can:

- Validate assumptions before you present to the board

- Pressure-test cost models, migration timelines, and risk plans

- Ask the questions vendors can’t (or won’t) answer

🧠 You bring the context. Keith brings 25+ years of hard-won judgment.

🕐 Turnaround: 12–72 hours depending on tier

🎯 No retainer. Just high-trust, high-impact feedback when it matters.

Keith Townsend is a seasoned technology leader and Founder of The Advisor Bench, specializing in IT infrastructure, cloud technologies, and AI. With expertise spanning cloud, virtualization, networking, and storage, Keith has been a trusted partner in transforming IT operations across industries, including pharmaceuticals, manufacturing, government, software, and financial services.

Keith’s career highlights include leading global initiatives to consolidate multiple data centers, unify disparate IT operations, and modernize mission-critical platforms for “three-letter” federal agencies. His ability to align complex technology solutions with business objectives has made him a sought-after advisor for organizations navigating digital transformation.

A recognized voice in the industry, Keith combines his deep infrastructure knowledge with AI expertise to help enterprises integrate machine learning and AI-driven solutions into their IT strategies. His leadership has extended to designing scalable architectures that support advanced analytics and automation, empowering businesses to unlock new efficiencies and capabilities.

Whether guiding data center modernization, deploying AI solutions, or advising on cloud strategies, Keith brings a unique blend of technical depth and strategic insight to every project.